Researchers from the University of California San Diego recently built a machine learning system that predicts what a bird’s about to sing as it sings.

The big idea here is real-time speech synthesis for vocal prostheses, but the implications could go much further.

Up front: Birdsong is a complex form of communication that involves rhythm, pitch, and, most importantly, learned behaviors.

According to the researchers, teaching an AI to understand these songs is a valuable step in training systems that can replace biological human vocalizations:

> While limb-based motor prosthetic systems have leveraged nonhuman primates as an important animal model, speech prostheses lack a similar animal model and are more limited in terms of neural interface technology, brain coverage, and behavioral study design.
>
> Songbirds are an attractive model for learned complex vocal behavior. Birdsong shares a number of unique similarities with human speech, and its study has yielded general insight into multiple mechanisms and circuits behind learning, execution, and maintenance of vocal motor skill.

But translating vocalizations in real time is no easy task. Current state-of-the-art systems are slow compared with the natural pace at which we turn thoughts into speech.

Think about it: cutting-edge natural language processing systems struggle to keep up with human thought.

When you interact with your Google Assistant or Alexa virtual assistant, there’s often a longer pause than you’d expect if you were talking to a real person. This is because the AI is processing your speech, determining what each word means in relation to its abilities, and then figuring out which packages or programs to access and deploy.

In the grand scheme, it’s amazing that these cloud-based systems work as fast as they do. But they’re still not fast enough to give people who can’t speak a seamless interface that works at the speed of thought.

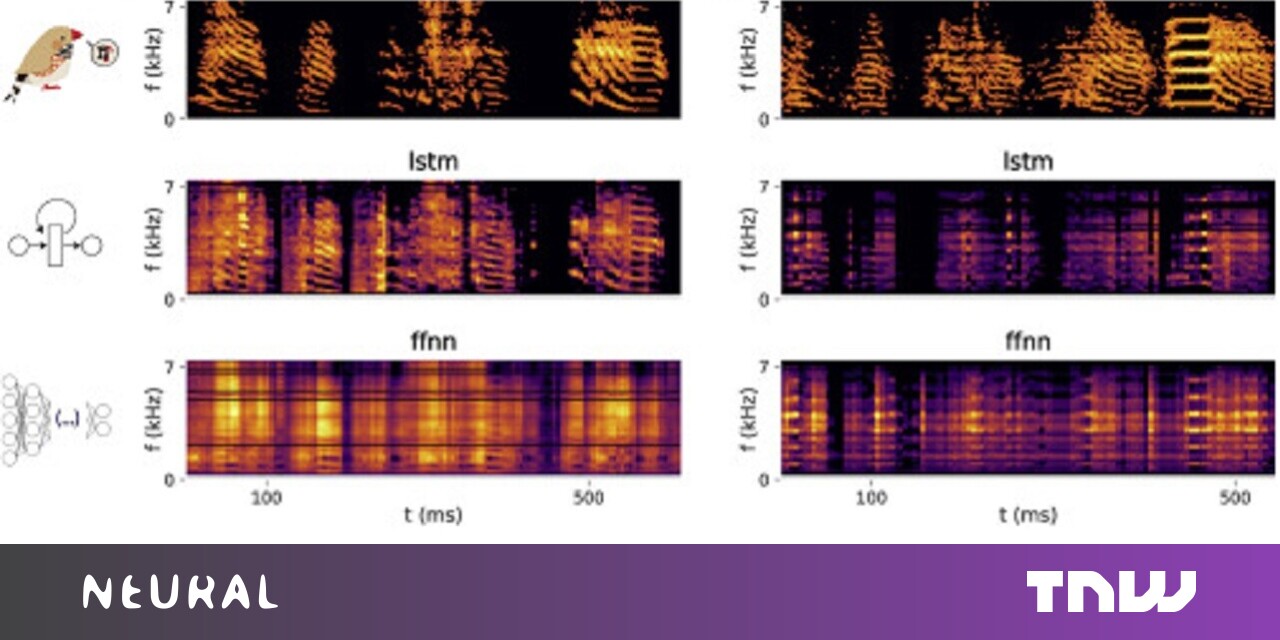

The work: First, the team implanted electrodes in a dozen bird brains (zebra finches, to be specific) and then started recording activity as the birds sang.

But it’s not enough just to train an AI to recognize neural activity as a bird sings: even a bird’s brain is far too complex to fully map how communication works across its neurons.

So the researchers trained a second system to reduce the songs, in real time, to recognizable patterns the AI can work with.
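The article doesn’t say how the decoder works internally. As a rough illustration of streaming decoding in general, here is a minimal Python sketch. It assumes neural activity arrives as a vector of features per time bin (for example, binned spike counts) and that the song has already been reduced to a small vector of features. `OnlineLinearDecoder` and `simulate` are hypothetical names, the least-mean-squares (LMS) update rule is a swapped-in textbook technique rather than the researchers’ method, and all data here is synthetic.

```python
import random
from typing import List, Tuple

Vector = List[float]


class OnlineLinearDecoder:
    """Hypothetical streaming decoder (illustration only, not the paper's model).

    Maps a vector of neural features for one time bin to a small vector of
    song features, and learns that mapping one sample at a time with the
    least-mean-squares (LMS) rule.
    """

    def __init__(self, n_in: int, n_out: int, learning_rate: float = 0.05) -> None:
        self.lr = learning_rate
        # One weight row per output (song feature), plus a bias per output.
        self.weights = [[0.0] * n_in for _ in range(n_out)]
        self.bias = [0.0] * n_out

    def predict(self, x: Vector) -> Vector:
        """Linear prediction: weights @ x + bias."""
        return [
            sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(self.weights, self.bias)
        ]

    def update(self, x: Vector, target: Vector) -> float:
        """Apply one LMS step and return the squared error before the step."""
        pred = self.predict(x)
        sq_err = 0.0
        for k, (p, t) in enumerate(zip(pred, target)):
            err = t - p
            sq_err += err * err
            row = self.weights[k]
            # Nudge each weight in the direction that reduces this output's error.
            for i, xi in enumerate(x):
                row[i] += self.lr * err * xi
            self.bias[k] += self.lr * err
        return sq_err


def simulate(
    n_steps: int = 2000, n_in: int = 8, n_out: int = 2, seed: int = 0
) -> Tuple[OnlineLinearDecoder, List[Vector], List[float]]:
    """Train the decoder on synthetic data drawn from a hidden linear mapping."""
    rng = random.Random(seed)
    # Synthetic "true" mapping from neural features to song features.
    true_w = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    decoder = OnlineLinearDecoder(n_in, n_out)
    errors = []
    for _ in range(n_steps):
        x = [rng.gauss(0.0, 1.0) for _ in range(n_in)]  # one time bin of "activity"
        y = [sum(w * xi for w, xi in zip(row, x)) for row in true_w]
        errors.append(decoder.update(x, y))
    return decoder, true_w, errors


if __name__ == "__main__":
    decoder, true_w, errors = simulate()
    print(f"mean squared error, first 100 bins: {sum(errors[:100]) / 100:.4f}")
    print(f"mean squared error, last 100 bins:  {sum(errors[-100:]) / 100:.2e}")
```

Each prediction costs one multiply-add per weight, which keeps per-bin latency low; the trade-off is that a linear map is far less expressive than whatever a real vocal decoder would need, which echoes the article’s point that fast shortcuts may not generalize.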

Quick take: This is pretty cool in that it provides a solution to an outstanding problem. Processing birdsong in real time is impressive, and replicating these results with human speech would be a eureka moment.

But this early work isn’t ready for prime time just yet. It appears to be a shoebox solution, in that its current iteration isn’t necessarily adaptable to other speech systems. To get it running fast enough, the researchers had to take a shortcut in speech analysis that might not work once you expand it beyond a bird’s vocabulary.

That being said, with further development this could be among the first giant technological leaps for brain-computer interfaces since the deep learning renaissance of 2014.

Read the whole paper here.

"interface" - Google News

June 18, 2021 at 11:34PM

https://ift.tt/3iS2roA

Researchers created a brain interface that can sing what a bird's thinking - The Next Web
