Retro Tech Week

In the early days of microcomputers, everyone just invented their own user interfaces, until an Apple-influenced IBM standard brought about harmony. Then, sadly, the world forgot.

In 1981, the IBM PC arrived and legitimized microcomputers as business tools, not just home playthings. The PC largely created the industry that the Reg reports upon today, and a vast and chaotic market for all kinds of software running on a vast range of compatible computers. Just three years later, Apple launched the Macintosh and made graphical user interfaces mainstream. IBM responded with an obscure and sometimes derided initiative called Systems Application Architecture, and while that went largely ignored, one part of it became hugely influential over how software looked and worked for decades to come.

One bit of IBM's vast standard described how software user interfaces should look and work – and largely by accident, that particular part caught on and took off. It didn't just guide the design of OS/2; it also influenced Windows, DOS and DOS apps, and pretty much all software that followed. So, for instance, the way almost every Linux desktop and GUI app works is guided by this now-forgotten doorstop of 1980s IBM documentation.

But its influence never reached one part of the software world: the Linux (and Unix) shell. Today, that failure is coming back to bite us. It's not the only reason – others lie in Microsoft marketing and indeed Microsoft legal threats, as well as the rise of the web and web apps, and of smartphones.

Culture clash

Although they have all blurred into a large and very confused whole now, 21st century software evolved out of two very different traditions. On one side, there are systems that evolved out of Unix, a multiuser OS designed for minicomputers: expensive machines, shared by a team or department, and used only via dumb text-only terminals on slow serial connections. At first, these terminals were teletypes – pretty much a typewriter with a serial port, physically printing on a long roll of paper. Devices with screens, glass teletypes, only came along later and at first faithfully copied the design – and limitations – of teletypes.

That evolution forced the hands of the designers of early Unix software: the early terminals didn't have cursor keys, or backspace, or modifier keys like Alt. (A fun aside: the system for controlling such keys, called Bucky bits, is another tiny part of the great legacy of Niklaus Wirth, whose nickname while in California was "Bucky.") So, for instance, one of the original glass teletypes, the Lear-Siegler ADM3A, is the reason for Vi's navigation keys, and ~ meaning the user's home directory on Linux.

When you can't freely move the cursor around the screen, or redraw isolated regions of the screen, it's either impossible or extremely slow to display menus over the top of the screen contents, or have them change to reflect the user navigating the interface.

The other type of system evolved out of microcomputers: inexpensive, standalone, single-user computers, usually based on the new low-end tech of microprocessors. Mid-1970s microprocessors were fairly feeble, eight-bit things with 16-bit address buses, meaning they could only directly address a maximum of 64kB of RAM. One result was tiny, very simple OSes. But the other was that most had their own display and keyboard, directly attached to the CPU. It was cheaper, and it was faster too. That meant video games, and that meant pressure to get a graphical display, even if a primitive one.

The first generation of home computers, from Apple, Atari, Commodore, Tandy, plus Acorn and dozens of others – all looked different, worked differently, and were totally mutually incompatible. It was the Wild West era of computing, and that was just how things were. Worse, there was no spare storage for luxuries like online help.

However, users were free to move the cursor around the screen and even edit the existing contents. Free from the limitations of being on the end of a serial line that only handled (at the most) some thousands of bits per second, apps and games could redraw the screen whenever needed. Meanwhile, even when micros were attached to bigger systems as terminals, over on Unix, design decisions that had been made to work around these limitations of glass teletypes still restricted how significant parts of the OS worked – and that's still true in 2024.

Universes collide

By the mid-1980s, the early eight-bit micros begat a second generation of 16-bit machines. In user interface design, things began to settle on some agreed standards of how stuff worked… largely due to the influence of Apple and the Macintosh.

Soon after Apple released the Macintosh, it published a set of guidelines for how Macintosh apps should look and work, to ensure that they were all similar enough to one another to be immediately familiar. You can still read the 1987 edition online [PDF].

This had a visible influence on the 16-bit generation of home micros, such as Commodore's Amiga, Atari's ST, and Acorn's Archimedes. (All right, except the Sinclair QL, but it came out before the Macintosh.) They all have reasonable graphics and sound, a floppy drive, and came with a mouse as standard. They all aped IBM's second-generation keyboard layout, too, which was very different from the original PC keyboard.

But most importantly, they all had some kind of graphical desktop – the famed WIMP interface. All had hierarchical menus, a standard file manager, copy-and-paste between apps: things that we take for granted today, but which in 1985 or 1986 were exciting and new. Common elements, such as standard menus and dialog boxes, were often reminiscent of MacOS.

One of the first graphical desktops to conspicuously imitate the Macintosh OS was Digital Research's GEM. The PC version was released in February 1985, and Apple noticed the resemblance and sued, which led to PC GEM being crippled. Fortunately for Atari ST users, when that machine followed in June, its version of GEM was not affected by the lawsuit.

The ugly duckling

Second-generation, 16-bit micros looked better and worked better – all except for one: the poor old IBM PC-compatible. These dominated business, and sold in the millions, but mid-1980s versions still had poor graphics, and no mouse or sound chip as standard. They came with text-only OSes: for most owners, PC or MS-DOS. For a few multiuser setups doing stock control, payroll, accounts and other unglamorous activities, DR's Concurrent DOS or SCO Xenix. Microsoft offered Windows 1 and then 2, but they were ugly, unappealing, had few apps, and didn't sell well.

This is the market IBM tried to transform in 1987 with its new PS/2 range of computers, which set industry standards that kept going well into this century: VGA and SVGA graphics, high-density 1.4MB 3.5 inch floppy drives, and a new common design for keyboard and mouse connectors – and came with both ports as standard.

IBM also promoted a new OS it had co-developed with Microsoft, OS/2, which we looked at 25 years on. OS/2 did not conquer the world, but as mentioned in that article, one aspect of OS/2 did: part of IBM's Systems Application Architecture. SAA was an ambitious effort to define how computers, OSes and apps could communicate, and in IBM mainframe land, a version is still around. One small part of SAA did succeed, a part called Common User Access. (The design guide mentioned in that blog post is long gone, but the Reg FOSS desk has uploaded a converted version to the Internet Archive.)

CUA proposed a set of principles on how to design a user interface: not just for GUI or OS/2 apps, but all user-facing software, including text-only programs, even on mainframe terminals. CUA was, broadly, IBM's version of Apple's Human Interface Guidelines – but it cautiously proposed a slightly different interface, as it was published around the time of multiple look-and-feel lawsuits, such as Apple versus Microsoft, Apple versus Digital Research, and Lotus versus Paperback.

CUA advised a menu bar, with a standard set of single-word menus, each with a standard basic set of options, and standardized dialog boxes. It didn't assume the computer had a mouse, so it defined standard keystrokes for opening and navigating menus, as well as for near-universal operations such as opening, saving and printing files, cut, copy and paste, accessing help, and so on.
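For readers who like to see such things written down, the idea of one shared keystroke table can be sketched in a few lines of Python. This is only an illustration: the bindings listed are the ones commonly cited as CUA conventions rather than a transcription of IBM's guide, and the names `CUA_KEYS` and `describe` are invented for this example.

```python
# Illustrative sketch only: a small lookup of commonly cited CUA keystrokes.
# CUA_KEYS and describe() are hypothetical names invented for this example,
# and the table is a partial list, not a copy of IBM's design guide.
CUA_KEYS = {
    "F1": "Help",
    "F10": "Activate menu bar",
    "Alt+F4": "Close window",
    "Shift+Del": "Cut",
    "Ctrl+Ins": "Copy",
    "Shift+Ins": "Paste",
}


def describe(key: str) -> str:
    """Return the action bound to a key, or a note that this sketch has none."""
    return CUA_KEYS.get(key, "(no standard binding in this sketch)")


if __name__ == "__main__":
    # Every compliant app would answer these questions the same way.
    for key in ("F1", "Shift+Ins", "F7"):
        print(f"{key:10} {describe(key)}")
```

The point of the design is that the table lives in the standard, not in each application: a user who learns it once can carry it from program to program.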

There is a good summary of CUA design in this 11-page ebook, Principles of UI Design [PDF]; it's from 2007 and has a slight Windows XP flavor to the pictures.

CUA brought SAA-nity to DOS

Windows 3.0 was released in 1990 and precipitated a transformation in the PC industry. For the first time, Windows looked and worked well enough that people actually used it from choice. Windows 3's user interface – it didn't really have a desktop as such – was borrowed directly from OS/2 1.2, from Program Manager and File Manager, right down to the little fake-3D shaded minimize and maximize buttons. Its design is straight by the CUA book.

Even so, Windows took a while to catch on. Many PCs were not that high-spec. If you had a 286 PC, Windows could use a megabyte or more of memory. If you had a 386 with 2MB of RAM, Windows could run in 386 Enhanced Mode, and not merely multitask DOS apps but also give them 640kB each. But for comparison, this vulture's work PC in 1991 only had 1MB of RAM, and the one before that didn't have a mouse, like many late-1980s PCs.

As a result, DOS apps continued to be the best sellers. The chart-topping PC apps of 1990 were WordPerfect v5.1 and Lotus 1-2-3 v2.2.

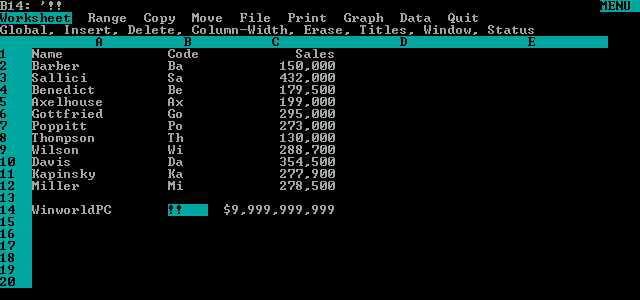

Lotus 1-2-3 was the original PC killer app and is a good example of a 1980s user interface. It had a two-line menu at the top of the screen, opened with the slash key. File was the fifth option, so to open a file, you pressed /, f, then r for Retrieve.

Microsoft Word for DOS also had a two-line menu, but at the bottom of the screen, with file operations under Transfer. So, in Word, the same operation used the Esc key to open the menus, then t, then l for Load.

Pre-WordPerfect hit word-processor WordStar used Ctrl plus letters, and didn't have a shortcut for opening a file, so you needed Ctrl+k, d, then pick a file and press d again to open a Document. For added entertainment, different editions of WordStar used totally different keystrokes: WordStar 2000 had a whole new interface, as did WordStar Express, known to many Amstrad PC owners as WordStar 1512.

The word processor that knocked WordStar off the Number One spot was the previous best-selling version of WordPerfect, 4.2. WordPerfect used the function keys for everything, to the extent that its keyboard template acted as a sort of copy-protection: it was almost unusable without one. (Remarkably, they are still on sale.) To open a file in WordPerfect, you pressed F7 for the full-screen File menu, then 3 to open a document. The big innovation in WordPerfect 5 was that, in addition to the old UI, it also had CUA-style drop-down menus at the top of the screen, which made it much more usable. For many fans, WordPerfect 5.1 remains the classic version to this day.

Every main DOS application had its own, unique user interface, and nothing was sacred. While F1 was Help in many programs, WordPerfect used F3 for that. Esc was often some form of Cancel, but WordPerfect used it to repeat a character.
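To make the scale of the mess concrete, the open-a-file sequences described above can be lined up side by side. The following is a minimal sketch in Python using only the keystrokes given in this article; the identifiers (`OPEN_FILE`, `shared_first_keys`, `distinct_sequences`) are hypothetical, and `<pick file>` simply stands in for choosing a file name.

```python
# Key sequences for opening a file, taken from the descriptions in the text.
# All identifiers are hypothetical; "<pick file>" marks the file-selection step.
OPEN_FILE = {
    "Lotus 1-2-3": ["/", "f", "r"],
    "Word for DOS": ["Esc", "t", "l"],
    "WordStar": ["Ctrl+k", "d", "<pick file>", "d"],
    "WordPerfect": ["F7", "3"],
}


def shared_first_keys(table):
    """Return the first keystrokes that every application has in common."""
    firsts = [set(sequence[:1]) for sequence in table.values()]
    return set.intersection(*firsts)


def distinct_sequences(table):
    """Count how many different key sequences the applications use."""
    return len({tuple(sequence) for sequence in table.values()})


if __name__ == "__main__":
    print("Shared first keystroke:", shared_first_keys(OPEN_FILE) or "none")
    print("Distinct sequences:", distinct_sequences(OPEN_FILE))
```

Four programs, four unrelated sequences, and not even the first keypress in common: knowing one told you nothing about the next.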

With every app having a totally different UI, even knowing one inside-out didn't help in any other software. Many PC users mainly used one program and couldn't operate anything else. Some software vendors encouraged this, as it helped them sell companion apps with compatible interfaces – for example, WordPerfect vendors SSI also offered a database called DataPerfect, while Lotus Corporation offered a 1-2-3 compatible word processor, Lotus Manuscript.

CUA came to this chaotic landscape and imposed a sort of ceasefire. Even if you couldn't afford a new PC able to run Windows well, you could upgrade your apps with new versions with this new, standardized UI. Microsoft Word 5.0 for DOS had the old two-line menus, but Word 5.5 had the new look. (It's now a free download and the curious can try it.) WordPerfect adopted the menus in addition to its old UI, so experienced users could just keep going while newbies could explore and learn their way around gradually.

Borland acquired the Paradox database and grafted on a new UI based on its TurboVision text-mode windowing system, loved by many from its best-selling development tools – as dissected in this excellent recent retrospective, The IDEs we had 30 years ago… and we lost.

The chaos creeps back

IBM's PS/2 range brought better graphics, but Windows 3 was what made them worth having, and its successor Windows 95 ended up giving the PC a pretty good GUI of its own. In the meantime, though, IBM's CUA standard brought DOS apps into the 1990s and caused vast improvements in usability: what IBM's guide called a "walk up and use" design, where someone who has never seen a program before can operate it first time.

The impacts of CUA weren't limited to DOS. The first ever cross-Unix graphical desktop, the Open Group's Common Desktop Environment uses a CUA design. Xfce, the oldest Linux desktop of all was modelled on CDE, so it sticks to CUA, even now.

Released one year after Xfce, KDE was based on a CUA design, but its developers seem to be forgetting that. In recent versions, some components no longer have menu bars. KDE also doesn't honour Windows-style shortcuts for window management and so on. GNOME and GNOME 2 were largely CUA-compliant, but GNOME 3 famously eliminated most of that… which opened up a window of opportunity for Linux Mint, which methodically put that UI back.

For the first decade of graphical Linux desktop environments, they all looked and worked pretty much like Windows. The first version of Ubuntu was released in 2004, arguably the first decent desktop Linux distro that was free of charge, which put GNOME 2 in front of a lot of new users.

Microsoft, of course, noticed. The Reg had already warned of future patent claims against Linux in 2003. In 2006, it began, with fairly general statements. In 2007, Microsoft started counting the patents that it claimed Linux desktops infringed, although it was too busy to name them.

Over a decade ago, The Reg made the case that the profusion of new, less-Windows-like desktops such as GNOME 3 and Unity were a direct result of this. Many other new environments have also come about since then, including a profusion of tiling window managers – the Arch wiki lists 14 for X.org and another dozen for Wayland.

These are mostly tools for Linux (and, yes, other FOSS Unix-like OS) users juggling multiple terminal windows. The Unix shell is a famously rich environment: hardcore shell users find little reason to leave it, except for web browsing.

And this environment is the one place where CUA never reached. There are many reasons. One is that tools such as Vi and Emacs were already well-established by the 1980s, and those traditions continued into Linux. Another is, as we said earlier, that tools designed for glass-teletype terminals needed different UIs, which have now become deeply entrenched.

Aside from Vi and Emacs, most other common shell editors don't follow CUA, either. The popular Joe uses classic WordStar keystrokes. Pico and Nano have their own.

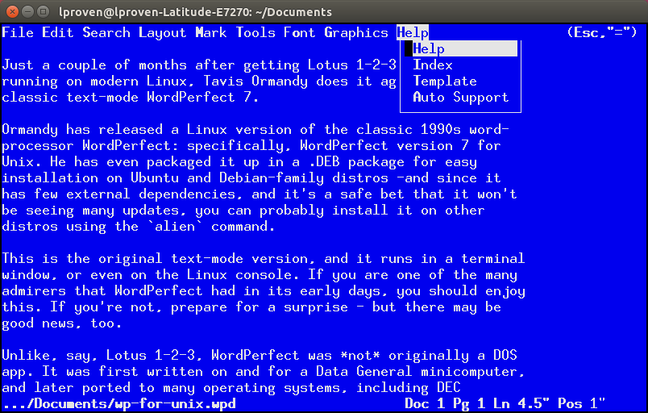

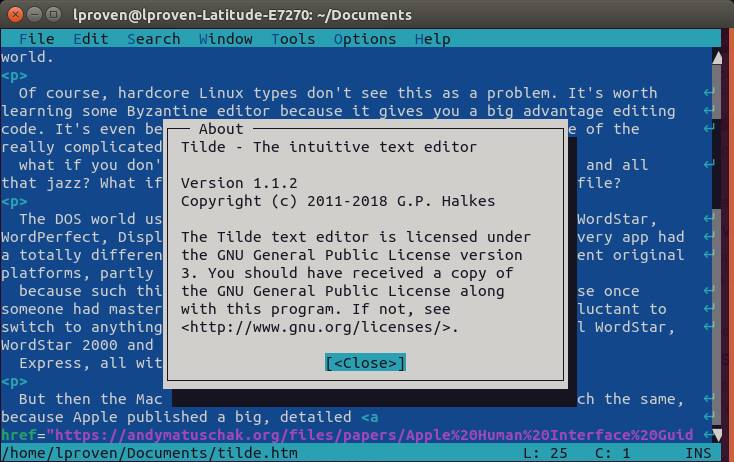

It's not that CUA-style editors for the shell don't exist. They do; they just never caught on, despite plaintive requests. Midnight Commander's mcedit is a stab in the general direction. The FOSS desk favourite Tilde is CUA, and as 184 comments imply, that's controversial.

The old-style tools could be adapted perfectly well. A decade ago, a project called Cream made Vim CUA-compliant; more recently, the simpler Modeless Vim delivers some of that. GNU Emacs' built-in cua-mode does almost nothing to modify the editor's famously opaque UI, but ErgoEmacs does a much better job. Even so, these remain tiny, niche offerings.

The problem is that developers who grew up with these pre-standardization tools, or with various keyboardless fondleslabs where such things don't exist, don't know what CUA means. If someone's not even aware there is a standard, then the tools they build won't follow it. As the trajectories of KDE and GNOME show, even projects that started out compliant can drift in other directions.

This doesn't just matter for grumpy old hacks. It also disenfranchizes millions of disabled computer users, especially blind and visually-impaired people. You can't use a pointing device if you can't see a mouse pointer, but Windows can be navigated 100 per cent keyboard-only if you know the keystrokes – and all blind users do. Thanks to the FOSS NVDA tool, there's now a first-class screen reader for Windows that's free of charge.

Most of the same keystrokes work in Xfce, MATE and Cinnamon, for instance. Where some are missing, such as the Super key not opening the Start menu, they're easily added. This also applies to environments such as LXDE, LXQt and so on.

Indeed, as we've commented before, the Linux desktop lacks diversity of design, but where you find other designs, the price is usually losing the standard keyboard UI. This is not necessary or inevitable: for instance, most of the CUA keyboard controls worked fine in Ubuntu's old Unity desktop, despite its Mac-like appearance. It's one of the reasons we still like it.

Menu bars, dialog box layouts, and standard keystrokes to operate software are not just some clunky old 1990s design to be casually thrown away. They were the result of millions of dollars and years of R&D into human-computer interfaces, a large-scale effort to get different types of computers and operating systems talking to one another and working smoothly together. It worked, and it brought harmony in place of the chaos of the 1970s and 1980s and the early days of personal computers. It was also a vast step forward in accessibility and inclusivity, opening computers up to millions more people.

Just letting it fade away due to ignorance and the odd traditions of one tiny subculture among computer users is one of the biggest mistakes in the history of computing.

Footnote

Yes, we didn't forget Apple kit. MacOS comes with the VoiceOver screen reader built in, but it imposes a whole new, non-CUA interface, so you can't really use it alongside a pointing device as Windows users can. As for VoiceOver on Apple fondleslabs, we don't recommend trying it. For a sighted user, it's the 21st century equivalent of setting the language on a mate's Nokia to Japanese or Turkish. ®
January 24, 2024 at 04:30PM

The rise and fall of the standard user interface - The Register