These days, display interfaces have become so impressive that 16K resolution is no longer a pipe dream!

Key Takeaways

- From old-school composite video to modern HDMI and DisplayPort, the monitor industry has evolved significantly over the years.

- Each display interface upgrade brought better image quality and higher resolution capabilities, catering to the demand for stunning visuals.

- While older interfaces like VGA and DVI have paved the way, HDMI and DisplayPort now dominate the market, offering audio and video in one connection.

From buttery-smooth 480Hz displays to ultra-sharp 4K OLED panels, monitors have come a long way over the last couple of decades. With premium gaming monitors rocking some amazing features like adaptive sync and HDR, it’s easy to spend thousands of dollars on a monitor if you’re a connoisseur of gorgeous visuals.

While hardware manufacturers deserve a lot of credit for the advancements in the monitor industry, the importance of newer display interfaces cannot be overstated. In this article, we’ll take a walk down memory lane and look back at the history of popular display interfaces.

Composite video

It all started with the yellow plug connector

The “yellow plug” composite video cable is a lot older than you may believe. Developed in the mid-1950s, the composite video interface predates the first-ever PC by almost two decades. Since composite RCA cables carried only the video signal, you had to use a separate pair of red and white cables for stereo audio.

Although it didn’t take long for the composite video interface to go mainstream, it had several drawbacks. Its analog signal was prone to degradation and interference. And because it combined the luminance (brightness) and chrominance (color) information on a single wire, the two signals interfered with each other, causing artifacts like dot crawl and color bleeding that cost a lot of visual fidelity. Plus, composite video was limited to standard definition (interlaced 480i in NTSC regions, 576i with PAL), which left a lot to be desired.

S-Video

A step up in terms of image quality, but not quite there yet

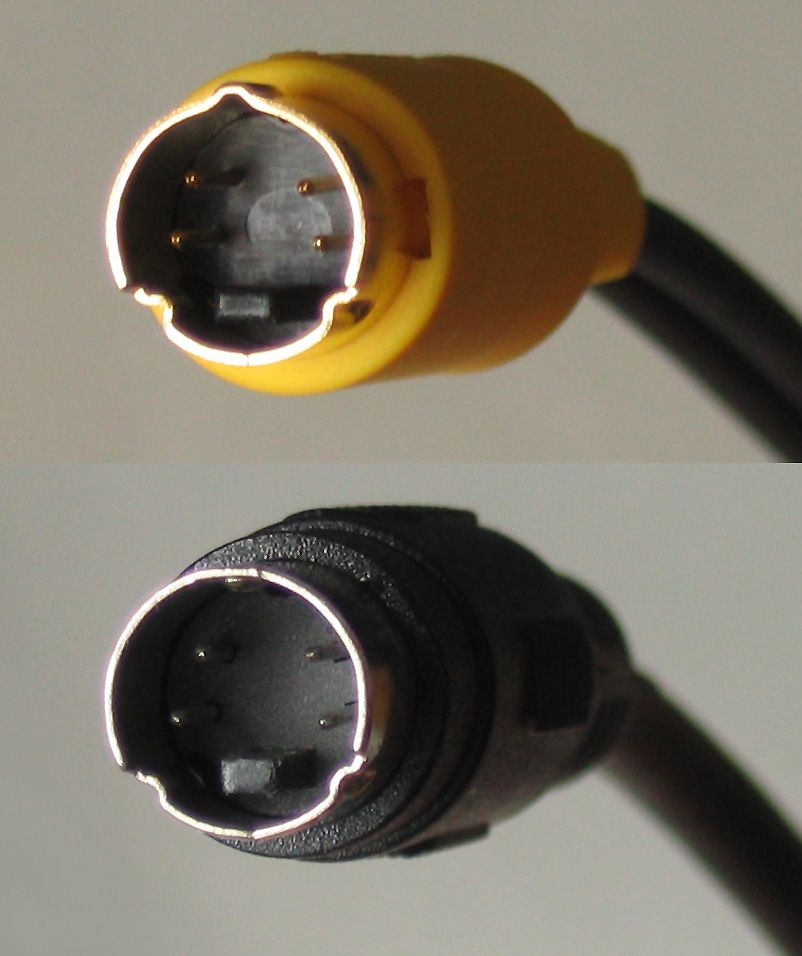

S-Video, or Separate Video, was designed to provide better image quality than composite video, and it went mainstream when JVC introduced it alongside the Super-VHS format in 1987. S-Video carried the luminance and chrominance signals on separate channels, providing a major leap in visual quality. Soon, game consoles, S-VHS decks, and TVs began featuring S-Video ports alongside the yellow plug connector.

Like composite video, S-Video was limited to standard-definition resolutions such as 480i. As such, a better interface was required to meet the growing demand for higher-resolution media.

SCART

Short for Syndicat des Constructeurs d'Appareils Radiorécepteurs et Téléviseurs

SCART, which shares its name with the French organization that created it, was a futuristic connector that packed several useful features. Introduced in 1976, SCART was one of the first connectors to carry both audio and video signals over a single cable, something that didn’t become commonplace on other interfaces until HDMI launched in 2002. Besides composite video, the bulky 21-pin SCART cable could also carry RGB signals, which produced noticeably better colors. It also carried signals in both directions, so you could daisy-chain your AV equipment, such as a VCR and a TV.

Unfortunately, SCART never gained much traction outside Europe, though it remained one of the most popular video interfaces there for a long time.

Component Video

Which took splitting signals to a whole new level

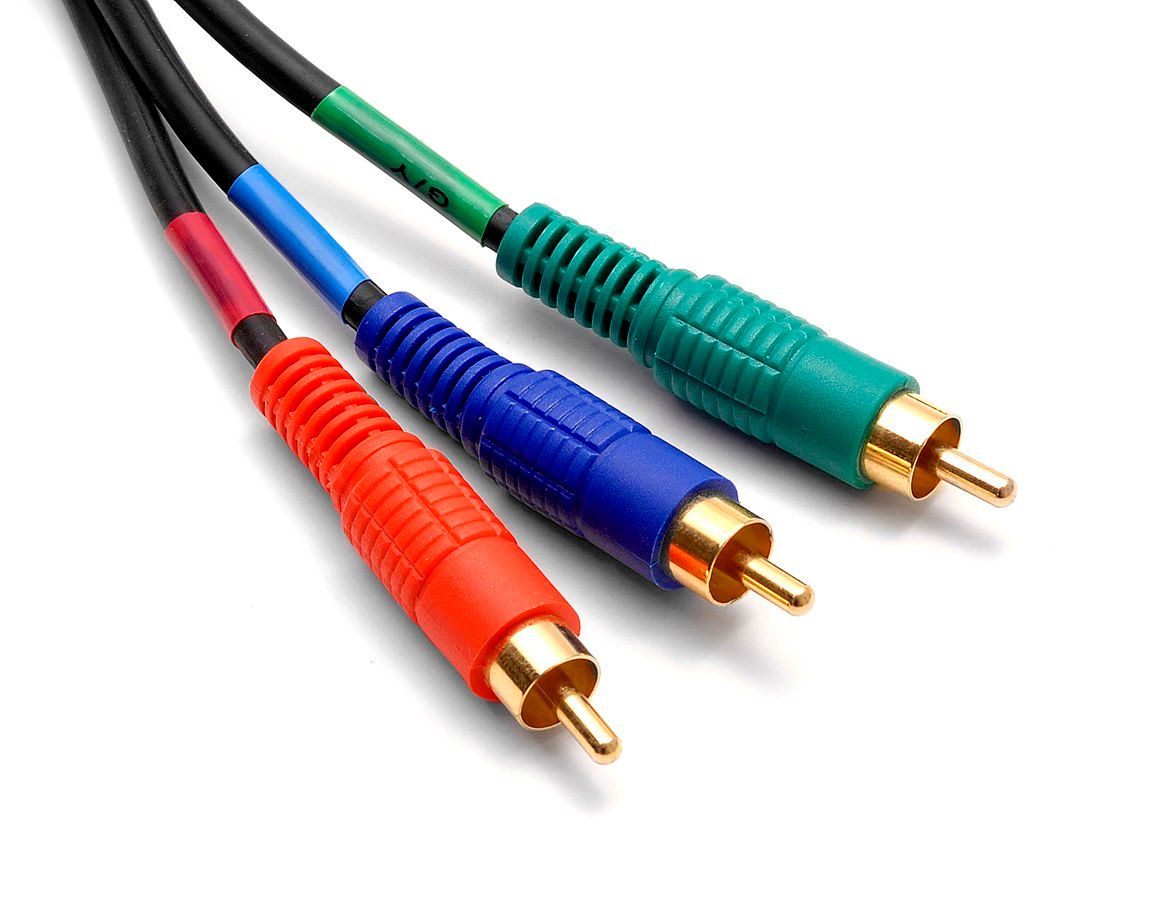

Although the composite video "yellow cable" was usually accompanied by two other cables, those were only used for audio. The component RCA interface, which debuted in the early 90s, instead required three separate cables just for the video signal. The extra effort was worth it: by splitting the picture into a luma signal (Y) and two color-difference signals (Pb and Pr), each on its own cable, component video could output richer colors. It also raised the maximum resolution to 1080p, bringing high-definition video to analog cables.
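For readers curious what splitting the signal into Y, Pb, and Pr actually means, here’s a minimal Python sketch of the conversion from RGB. It assumes the ITU-R BT.601 coefficients used for standard-definition video (HD component signals generally use the BT.709 weights instead), and `rgb_to_ypbpr` is a hypothetical helper name, not part of any library.

```python
# Minimal sketch: RGB -> YPbPr using ITU-R BT.601 luma weights.
# Assumption: BT.601 (standard definition); HD component video usually uses BT.709.
KR, KB = 0.299, 0.114   # BT.601 red and blue luma weights
KG = 1.0 - KR - KB      # green weight (0.587)


def rgb_to_ypbpr(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Convert normalized R, G, B (0.0-1.0) to Y (0..1) and Pb, Pr (-0.5..0.5)."""
    y = KR * r + KG * g + KB * b        # luma: weighted brightness
    pb = 0.5 * (b - y) / (1.0 - KB)     # blue-difference signal, scaled to +/-0.5
    pr = 0.5 * (r - y) / (1.0 - KR)     # red-difference signal, scaled to +/-0.5
    return y, pb, pr


if __name__ == "__main__":
    samples = {"white": (1, 1, 1), "red": (1, 0, 0), "blue": (0, 0, 1)}
    for name, rgb in samples.items():
        y, pb, pr = rgb_to_ypbpr(*rgb)
        print(f"{name:>5}: Y={y:.3f} Pb={pb:+.3f} Pr={pr:+.3f}")
```

Pure white comes out as full luma with both color-difference signals at zero, which is why a component setup with only the Y cable connected shows a black-and-white picture.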

That said, component RCA was a display standard for televisions and other consumer electronics, at a time when PCs were still relatively niche. It was never adopted as the industry-wide standard for PC monitors; on computers, that role had already gone to VGA.

VGA

This aging interface can still be seen on certain devices

Switching gears from TVs to PC monitors: while the RCA and S-Video cables were duking it out on the television side, the PC industry barely had decent display interfaces. IBM computers were equipped with the MDA (Monochrome Display Adapter), CGA (Color Graphics Adapter), or EGA (Enhanced Graphics Adapter) standards, and each one had its fair share of issues. The breakthrough didn’t come until 1987, when IBM released the Video Graphics Array (VGA) standard with its PS/2 PCs.

Using a 15-pin D-sub connector, VGA transmitted analog RGB signals. The original 1987 standard topped out at 640×480 with 16 colors (or 320×200 with 256 colors chosen from a palette of 262,144), but the same analog connector went on to carry much higher resolutions, including 1080p, on later graphics hardware. The new interface was well-received, and IBM quickly integrated it into the rest of its PC lineup. By the 90s, VGA had exploded in popularity, prompting other computer manufacturers to add VGA connectors to their systems. VGA can still be found on a few products today, a testament to its longevity.

DVI

Finally, the industry moved away from analog signals

While VGA and the various RCA-based standards were impressive technologies for their time, they all relied on analog signals. That made them susceptible to distortion, with issues like ghosting and signal noise degrading the image quality.

By contrast, the Digital Visual Interface (DVI), introduced in 1999, could carry digital signals, making it superior to the older analog standards. Because a digital signal doesn’t need to be converted between analog and digital along the way, DVI produced much sharper images than VGA on flat-panel monitors, and the dual-link DVI variants doubled the available bandwidth to support higher resolutions. Unfortunately, DVI enjoyed a short time in the spotlight, as its biggest rival, HDMI, debuted within three years of its release.
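To put rough numbers on the single-link versus dual-link difference, the Python sketch below estimates a display mode’s pixel clock and checks it against DVI’s limits. It assumes a 165 MHz ceiling for single-link DVI, double that for dual-link, and a flat 12% blanking overhead as a hypothetical stand-in for real monitor timings, so treat the output as a ballpark figure rather than a compatibility guarantee. The function names are illustrative.

```python
# Rough sketch: does a display mode fit single-link or dual-link DVI?
# Assumptions: single-link DVI tops out at a 165 MHz pixel clock, dual-link
# doubles that, and blanking is approximated by a flat 12% overhead
# (a hypothetical stand-in for real timing formulas, not an exact calculation).
SINGLE_LINK_MHZ = 165.0
DUAL_LINK_MHZ = 2 * SINGLE_LINK_MHZ
BLANKING_OVERHEAD = 0.12  # hypothetical flat overhead factor


def pixel_clock_mhz(width: int, height: int, refresh_hz: float) -> float:
    """Estimate the pixel clock (MHz) for a mode, including blanking overhead."""
    active_pixels_per_second = width * height * refresh_hz
    return active_pixels_per_second * (1 + BLANKING_OVERHEAD) / 1e6


def dvi_link_needed(width: int, height: int, refresh_hz: float) -> str:
    """Return 'single-link', 'dual-link', or 'beyond DVI' for the estimated clock."""
    clock = pixel_clock_mhz(width, height, refresh_hz)
    if clock <= SINGLE_LINK_MHZ:
        return "single-link"
    if clock <= DUAL_LINK_MHZ:
        return "dual-link"
    return "beyond DVI"


if __name__ == "__main__":
    modes = [(1920, 1080, 60), (1920, 1200, 60), (2560, 1600, 60), (3840, 2160, 60)]
    for mode in modes:
        print(mode, f"{pixel_clock_mhz(*mode):.1f} MHz", dvi_link_needed(*mode))
```

Under these assumptions, 1920×1200 at 60 Hz squeezes into single-link DVI, 2560×1600 at 60 Hz needs dual-link, and 4K at 60 Hz is out of reach for DVI entirely.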

HDMI

Bringing audio and video signals to your monitor

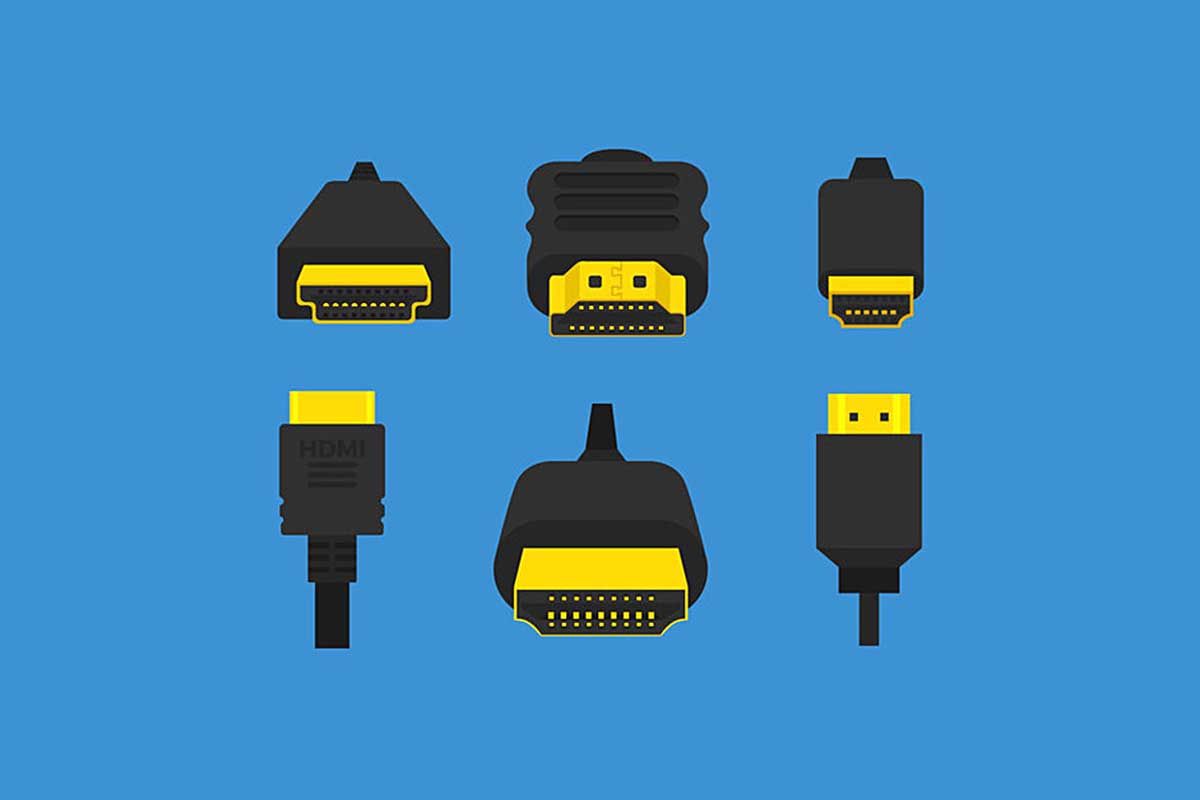

Up until this point, there wasn’t a single interface shared by TVs, PCs, and home theater gear. So several big-name brands, including Sony, Toshiba, and Panasonic, banded together to develop the High-Definition Multimedia Interface (HDMI). First released in 2002, HDMI quickly became an industry-wide standard powering TVs, PC monitors, Blu-ray players, and everything in between.

One of HDMI’s headline features was its ability to carry both audio and video over a single cable, eliminating the need for separate audio cables. Although the plethora of updates to HDMI turned its naming scheme into a confusing mess, each successive version added new capabilities. More than two decades after its release, HDMI remains the most popular display standard, though it frequently trades blows with its performance-oriented rival, DisplayPort.

DisplayPort

The PC enthusiast’s display interface

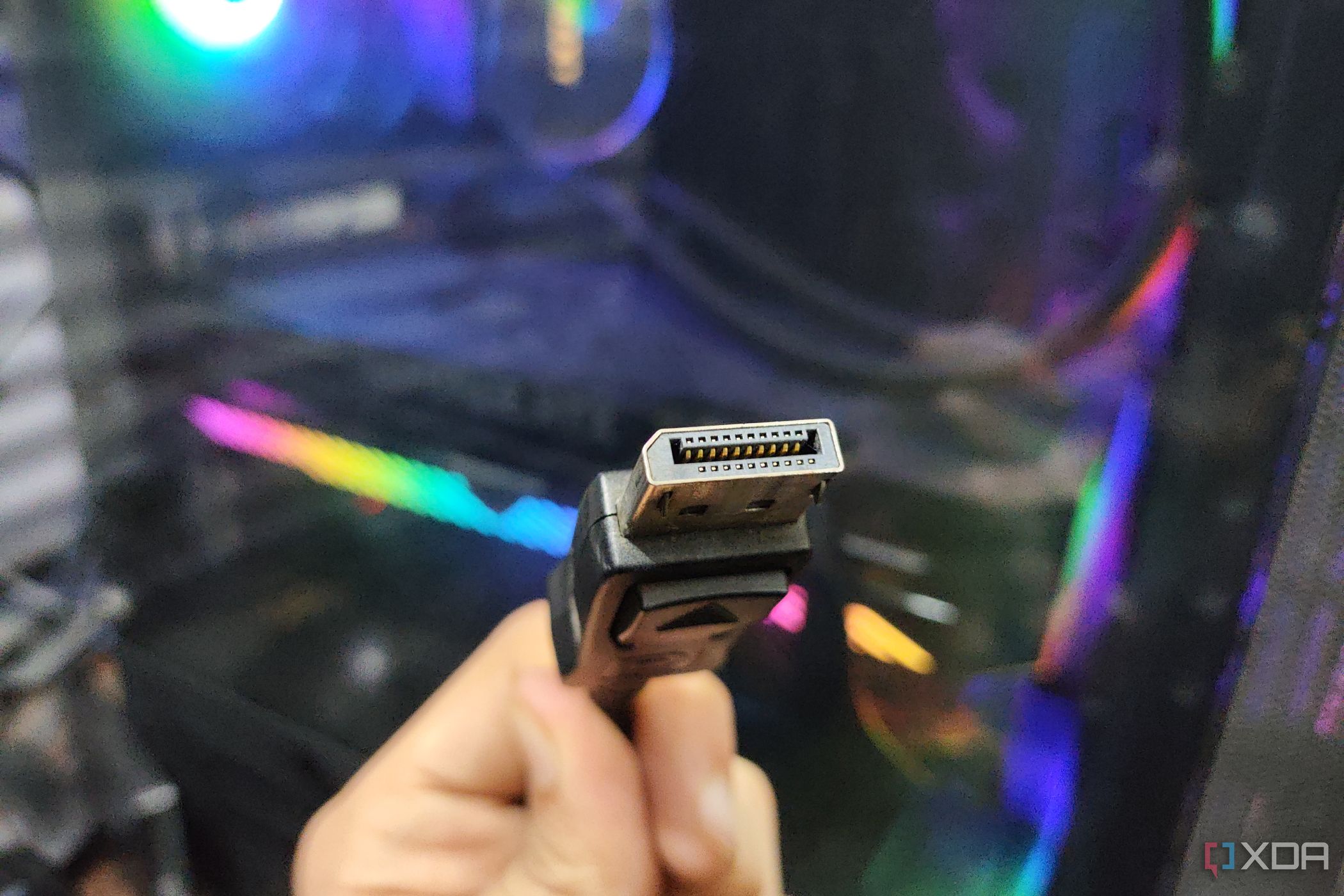

Released by the Video Electronics Standards Association (VESA) in May 2006, DisplayPort was designed to replace the aging VGA and DVI standards. Unlike HDMI, which targeted consumer electronics in general, DisplayPort was created primarily for PC monitors, with computer enthusiasts as its target audience. VESA has updated the interface frequently over the years, and each revision pushed resolution, refresh rate, and bandwidth to new heights.

Apple soon jumped on the DisplayPort bandwagon, unveiling Mini DisplayPort, a smaller version of the connector, in 2008. For almost a decade, Apple’s MacBooks used Mini DisplayPort to connect to external displays. Starting in 2016, Apple began replacing Mini DisplayPort with USB Type-C connectors. Because USB Type-C can carry DisplayPort signals, many laptop manufacturers dropped the HDMI port in favor of the new connector. Once Thunderbolt 3 adopted the USB Type-C connector, there was no turning back.

These days, HDMI and DisplayPort have improved to the point where they share many features, and it's possible to find both ports on televisions and monitors.

Display interfaces in 2024

And here we are, nearly 70 years since the release of the composite RCA technology. From the rudimentary composite RCA, it’s been a long journey to the era of HDMI and DisplayPort.

While there were many complications along the way (with HDMI’s terrible naming scheme still an issue), we’re finally in an era where visual fidelity isn’t held back by underdeveloped display interfaces. After all, had monitors stuck with DVI, or god forbid, VGA, even the best GPUs couldn’t drive today’s high-resolution, high-refresh-rate displays.

February 26, 2024 at 11:00PM

History of display interfaces: The journey from composite video to HDMI and DisplayPort - XDA Developers